Availability targets are a business decision before they are a technical one. They determine whether transactions settle, cash is dispensed, and trades are executed, and they set the standard for how you design failover, data integrity, and maintenance. The shorthand of “nines” translates directly into the downtime a Service Level Agreement (SLA) allows.

| “Nines” | Availability | Approx. downtime per year | Approx. downtime per month | Typically achievable with |
|---|---|---|---|---|
| 3 nines | 99.9% | ~8.77 hours | ~43.8 minutes | Cloud single‑AZ deployment |
| 3.5 nines | 99.95% | ~4.38 hours | ~21.9 minutes | Cloud multi‑AZ deployment |
| 4 nines | 99.99% | ~52.6 minutes | ~4.38 minutes | Cloud multi‑AZ deployment (enhanced) |
| 5 nines | 99.999% | ~5.26 minutes | ~26 seconds | IBM Mainframe, HPE NonStop |
| 6 nines | 99.9999% | ~31.5 seconds | ~2.6 seconds | IBM Mainframe, HPE NonStop |
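The downtime figures come from a single formula: allowed downtime = (1 − availability) × period. The Rust sketch below regenerates the table. It assumes a 365.25‑day year and an average month of one twelfth of that (~30.44 days), which matches the figures above. The function and constant names are illustrative.

```rust
// Assumption: a 365.25-day year and an average month of 1/12 of that (~30.44 days).
const SECONDS_PER_YEAR: f64 = 365.25 * 24.0 * 3600.0;
const SECONDS_PER_MONTH: f64 = SECONDS_PER_YEAR / 12.0;

/// Allowed downtime in seconds over `period_secs` for an availability given in per cent.
fn downtime_secs(availability_pct: f64, period_secs: f64) -> f64 {
    (1.0 - availability_pct / 100.0) * period_secs
}

fn main() {
    for pct in [99.9, 99.95, 99.99, 99.999, 99.9999] {
        let year = downtime_secs(pct, SECONDS_PER_YEAR);
        let month = downtime_secs(pct, SECONDS_PER_MONTH);
        // Yearly budget in minutes, monthly budget in seconds.
        println!("{pct:>8}%  {:>10.1} min/year  {:>8.1} s/month", year / 60.0, month);
    }
}
```

For 99.99 per cent, for example, this gives about 52.6 minutes per year and about 263 seconds (~4.38 minutes) per month.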

These figures come from widely used uptime calculators and are a common way to align stakeholders on what each additional nine buys you.

In practice, the picture is more complex, because many cloud SLAs measure availability in fixed intervals, often five minutes long. A brief two‑minute network blip may not count as an “unavailable” interval at all. Multiple short breaks that fall in different five‑minute windows will not breach a cloud SLA. An interval counts as downtime only if the loss of connectivity persists for the entire five‑minute window. If the measured availability falls below the SLA guarantee, the subscriber becomes eligible for a service credit, typically a discount on future purchases. This granularity also makes extremely high availability hard for current cloud providers to measure. A single counted five‑minute interval consumes almost the whole annual five‑nines budget of ~5.26 minutes, and it is nearly ten times the six‑nines budget of ~31.5 seconds.
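A small simulation shows how interval‑based measurement hides short outages. The Rust sketch below is a simplified model, not any provider's actual formula. It takes one availability sample per minute for a 30‑day month and counts a five‑minute window as downtime only if the service was unavailable for the whole window. It then compares that figure with the availability users actually experienced. The outage pattern is hypothetical.

```rust
/// Availability as an interval-based SLA might report it (simplified model, not any
/// provider's formula): one sample per minute (`true` = unavailable), cut into fixed
/// windows; a window counts as downtime only if it was unavailable throughout.
/// `window` must be greater than zero.
fn windowed_availability(down_minutes: &[bool], window: usize) -> f64 {
    let total = down_minutes.chunks(window).count();
    let down = down_minutes
        .chunks(window)
        .filter(|w| w.iter().all(|&d| d))
        .count();
    1.0 - down as f64 / total as f64
}

/// Availability as users experience it: every unavailable minute counts.
fn raw_availability(down_minutes: &[bool]) -> f64 {
    let down = down_minutes.iter().filter(|&&d| d).count();
    1.0 - down as f64 / down_minutes.len() as f64
}

fn main() {
    const MONTH_MINUTES: usize = 30 * 24 * 60; // 43,200 one-minute samples
    let mut down = vec![false; MONTH_MINUTES];
    // Hypothetical: twenty two-minute blips, each inside its own five-minute window.
    for i in 0..20 {
        let start = i * 1_000;
        down[start..start + 2].fill(true);
    }
    // Hypothetical: one seven-minute outage starting mid-window, so it fully
    // covers only one five-minute window.
    down[30_003..30_010].fill(true);

    println!("user-experienced availability: {:.3}%", raw_availability(&down) * 100.0);
    println!("5-minute-window availability:  {:.3}%", windowed_availability(&down, 5) * 100.0);
}
```

In this scenario users lose 47 minutes, or about 99.89 per cent availability, which is below three nines. The window‑based figure counts only a single five‑minute interval and reports about 99.988 per cent.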

Additionally, cloud maintenance windows are typically not counted as downtime. Some operations that AWS performs transparently, such as moving an EC2 instance within its estate, do not count against the SLA if they fail because of application‑level incompatibilities. In other words, if your application cannot cope with such a change, the resulting disruption is not considered a service outage.

What hyperscalers actually commit to

Hyperscalers provide strong building blocks when you design across failure domains, but their stated commitments set a realistic ceiling for infrastructure availability. AWS offers a 99.99 per cent Region‑Level SLA for EC2 when all running instances are deployed concurrently across two or more Availability Zones in the same region. This is clearly described in the EC2 SLA and is the reference level most teams quote for multi‑AZ designs.

Microsoft positions 99.99 per cent uptime for virtual machines when two or more instances are deployed across Availability Zones, which are physically separate groups of datacentres within a region.

Google Cloud’s Compute Engine SLA sets 99.99 per cent for instances deployed across multiple zones in a region on the premium network tier.

These are credible four‑nines building blocks when your architecture spreads compute across zones and your stateful dependencies are also designed for zone‑level resilience.

Why five and six nines remain exceptional

Five to six nines means only minutes or seconds of interruption per year. Sectors such as payments, capital markets, telecom control planes, air transport systems, and critical infrastructure often require this level because even short interruptions can have outsized financial, regulatory, or safety impacts. At this level, the distinction between high availability and fault tolerance matters. High availability typically means failover that completes quickly. Fault tolerance means the system keeps operating through a component failure without a service break from the user's perspective.

These figures apply only to individual, atomic services. In reality, payment or telecom applications rely on multiple services, and a failure in any one of them can affect client‑facing operations. Because the availabilities of serial dependencies multiply, an application that depends on ten services at 99.9 per cent each has an end‑to‑end availability of roughly 0.999¹⁰ ≈ 99.0 per cent. That is roughly ten times the downtime of any single dependency.
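Multiplying availabilities explains this compounding effect. The Rust sketch below assumes independent failures, which is optimistic in practice: shared zones, control planes, and deployments all introduce correlated failures. As a counterweight, it also shows the standard formula for redundant replicas, 1 − (1 − a)ⁿ. The two‑replica scenario is hypothetical.

```rust
/// End-to-end availability of services called in series: every dependency must be
/// up, so (assuming independent failures) the availabilities multiply.
fn serial(availabilities: &[f64]) -> f64 {
    availabilities.iter().product()
}

/// Availability of `n` redundant replicas where any one replica is enough,
/// again assuming independent failures: 1 - (1 - a)^n.
fn parallel(a: f64, n: i32) -> f64 {
    1.0 - (1.0 - a).powi(n)
}

fn main() {
    let ten_deps = [0.999; 10];
    println!("10 x 99.9% in series: {:.3}%", serial(&ten_deps) * 100.0);

    // Hypothetical: the same chain, but each dependency runs as two independent replicas.
    let redundant: Vec<f64> = ten_deps.iter().map(|&a| parallel(a, 2)).collect();
    println!("10 x (2 replicas of 99.9%) in series: {:.5}%", serial(&redundant) * 100.0);
}
```

Ten 99.9 per cent dependencies in series give about 99.004 per cent. With two independent replicas per dependency, the same chain reaches about 99.999 per cent on paper. This is why redundancy must cover every dependency in the path. It is also why correlated failures, not the formula, usually set the real ceiling.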

Additionally, if a cloud provider violates its own SLA, it typically issues a service credit for the affected service only. However, you are still required to pay for all other services that were impacted indirectly by the failure of that single service.

When you move from four to five nines, you can no longer rely on quick restarts and regional rerouting alone. You need redundancy at the component and transaction level, rolling maintenance that never interrupts the service, and careful handling of in‑flight state so that work is either completed atomically or not started at all.

IBM Mainframe and HPE NonStop as fault‑tolerant cores

Two platform families stand out as references for continuous operations. IBM Z is engineered for mission‑critical transactional workloads in banking and insurance and is positioned for top availability alongside performance and security. The latest generation, IBM z17, extends these characteristics and focuses on operational reliability while adding AI capabilities. This shows the platform is still being developed with uninterrupted transaction processing as a priority.

HPE NonStop systems are described as engineered for the highest availability and have long been used for OLTP in payments and telecoms. HPE presents the platform as fully fault‑tolerant for environments that need continuous business operations.

For workloads that require five to six nines, these platforms provide patterns that have been tested in production for decades.

Both platform lines were designed with self‑healing and zero‑downtime operation in mind, including pervasive hardware redundancy and non‑disruptive maintenance. In cloud environments, a comparable approach is implemented with multi‑node clusters behind load balancers, containerisation, and Kubernetes management for rolling updates, health‑based rescheduling, and horizontal failover.
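In Kubernetes, readiness probes and Services handle health‑based routing rather than application code, but the idea is simple to sketch. The Rust example below is a hypothetical round‑robin balancer that skips replicas failing their health check. Traffic therefore fails over horizontally when one zone's replica goes down. The `Replica` and `Balancer` types and the zone names are illustrative.

```rust
/// Health state of one replica behind a load balancer (hypothetical model).
#[derive(Debug)]
struct Replica {
    name: String,
    healthy: bool,
}

/// Round-robin selection that skips replicas failing their health check.
struct Balancer {
    replicas: Vec<Replica>,
    next: usize,
}

impl Balancer {
    fn new(replicas: Vec<Replica>) -> Self {
        Balancer { replicas, next: 0 }
    }

    /// Returns the next healthy replica, or None if every replica is down.
    fn pick(&mut self) -> Option<&Replica> {
        let n = self.replicas.len();
        for offset in 0..n {
            let idx = (self.next + offset) % n;
            if self.replicas[idx].healthy {
                self.next = idx + 1; // resume after this replica next time
                return Some(&self.replicas[idx]);
            }
        }
        None
    }

    /// Records the result of a health check for the named replica.
    fn set_health(&mut self, name: &str, healthy: bool) {
        if let Some(r) = self.replicas.iter_mut().find(|r| r.name == name) {
            r.healthy = healthy;
        }
    }
}

fn main() {
    let mut lb = Balancer::new(
        ["az-a", "az-b", "az-c"]
            .iter()
            .map(|n| Replica { name: n.to_string(), healthy: true })
            .collect(),
    );
    lb.set_health("az-b", false); // simulate a zone failing its health check
    for _ in 0..4 {
        if let Some(r) = lb.pick() {
            println!("routing to {}", r.name);
        }
    }
}
```

With `az-b` marked unhealthy, requests alternate between `az-a` and `az-c`. If every replica fails its check, `pick` returns `None`, and the caller must degrade or reject the request.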

Skills and modernisation: COBOL on IBM Z

Much of the IBM mainframe estate is written in COBOL. COBOL skills are now scarce in the developer market, so organisations are exploring translation from COBOL to Java or refactoring to more common stacks. IBM is developing tools such as watsonx Code Assistant for Z to assist these conversion and modernisation paths. This matters when planning long‑term operating models and availability targets.

Where the cloud excels, and where it needs help

Public cloud is the right place for elastic, rapidly evolving customer experiences, analytics, and digital channels. Within a region, the zone model and managed services make four nines feasible for well‑designed applications. However, a full service can be no more available than its least available dependency. Compute might be at 99.99 per cent, but identity, storage, messaging, and control planes must meet the same bar, or your end‑to‑end target will not be met. Designing beyond four nines in cloud usually demands multi‑region active‑active deployment, idempotent operations, and strict versioning of contracts. It also needs data synchronisation strategies with measurable latency budgets. You must validate behaviour under zone and region failure, and treat maintenance events as part of the availability budget, not as free time.

Please ensure that the SLA calculation method you define in contracts with your customers aligns with the method your cloud provider uses to calculate its SLAs. Otherwise, in the event of a failure, you, not the cloud provider, may be the one responsible for paying penalties.

Additionally, the cost that is most often underestimated is network traffic. In on‑premises environments, data transfers between services are essentially free, so teams typically have little visibility into actual volumes. In the cloud, however, network charges can quickly become one of the largest items on the bill, especially when combined with high‑availability replication.
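Replication traffic can be estimated early with simple arithmetic: daily change volume × days × number of extra copies × per‑GB transfer price. The Rust sketch below uses hypothetical inputs: 500 GB of changes per day, two additional zones, and an assumed blended rate of 0.02 per GB. Substitute your provider's published data‑transfer rates, which vary by provider, region pair, and direction.

```rust
/// Rough monthly cost of replicating written data to `extra_copies` other zones or regions.
/// All inputs are hypothetical placeholders, not any provider's actual pricing.
fn monthly_replication_cost(gb_written_per_day: f64, extra_copies: u32, price_per_gb: f64) -> f64 {
    const DAYS_PER_MONTH: f64 = 30.0;
    gb_written_per_day * DAYS_PER_MONTH * extra_copies as f64 * price_per_gb
}

fn main() {
    // Hypothetical workload: 500 GB/day of changes copied to two other zones
    // at an assumed blended transfer rate of 0.02 per GB.
    let cost = monthly_replication_cost(500.0, 2, 0.02);
    println!("estimated replication traffic: {cost:.0} per month");
}
```

With these placeholder numbers, replication alone costs 600 per month. Doubling the write volume or adding a copy scales the bill linearly, before any cross‑region disaster‑recovery traffic is counted.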

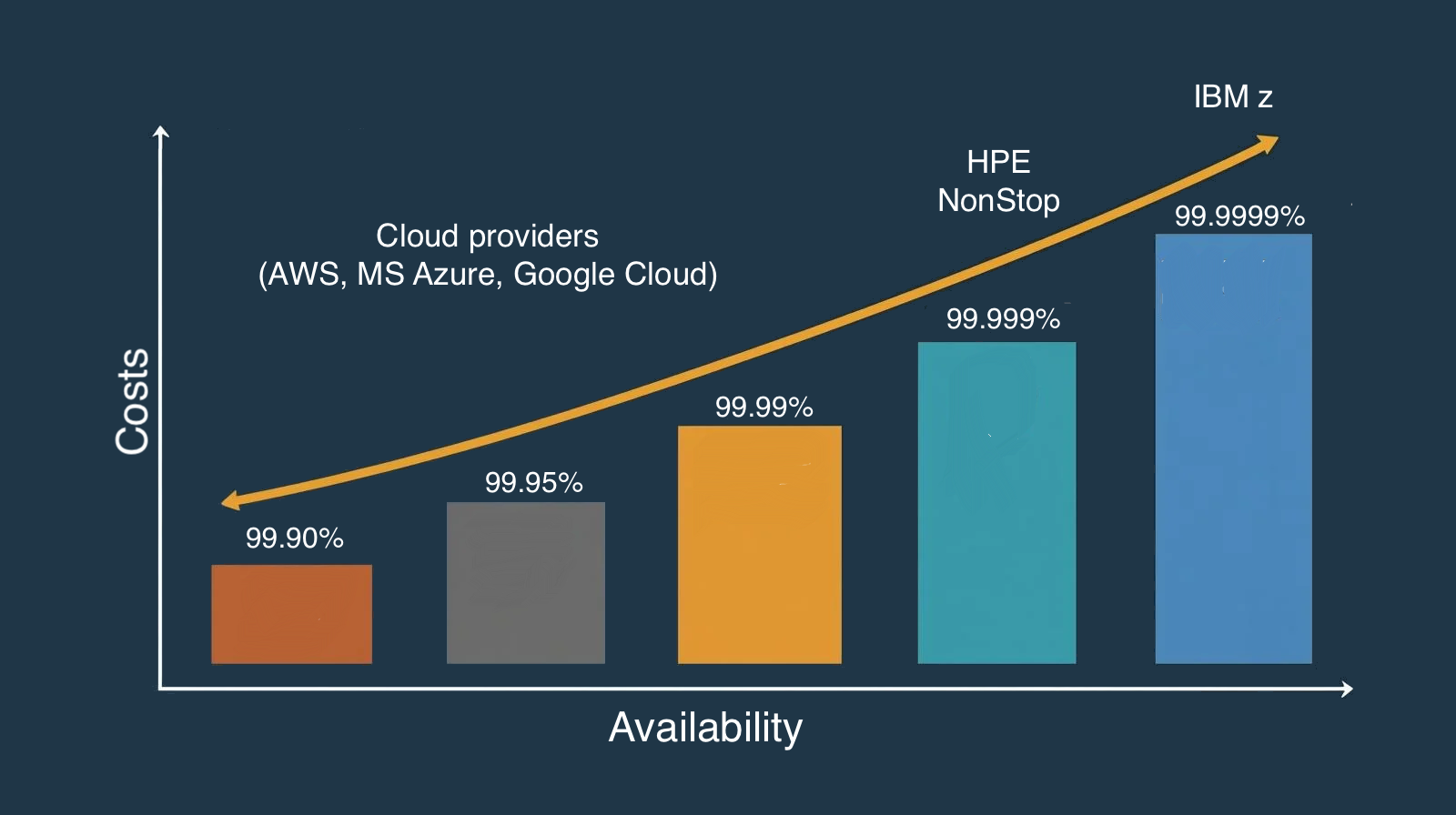

Indicative cost to achieve availability targets

Availability is always a trade‑off with budget. The following bands are directional and assume representative enterprise workloads.

| Availability target | Typical architecture | Indicative CapEx | Indicative OpEx | Cost drivers and notes |
|---|---|---|---|---|
| Three to three‑and‑a‑half nines (99.9%–99.95%) | Cloud, single‑AZ to basic multi‑AZ | Minimal | ~£4k–£40k per month (roughly $5k–$50k) | Depends on VM count, storage class, traffic, and reserved versus on‑demand pricing. Moving from single‑AZ to basic multi‑AZ raises spend due to duplicated capacity and data replication. |
| Four nines (99.99%) | Cloud, enhanced multi‑AZ with zone‑redundant data | Minimal | ~20%–60% uplift on the steady‑state cloud costs of a 3.5‑nines design | Zone‑redundant databases, cross‑zone traffic, stronger observability and testing. The increase is driven by storage replication and data transfer (egress) within or across regions. |
| Five to six nines (99.999%–99.9999%) | Fault‑tolerant cores: IBM Z, HPE NonStop | IBM Z: low millions to eight figures. HPE NonStop: high hundreds of thousands to several millions | IBM Z: low to high hundreds of thousands per year. HPE NonStop: six figures per year | Software licensing, maintenance, energy, specialist staff. These platforms consolidate reliability into the core and reduce the moving parts visible to applications. |

How Digital Bank Expert bridges the divide with Rust

This is the point where architecture meets implementation. Digital Bank Expert designs and delivers hybrid patterns that keep systems of record on platforms built for fault tolerance, while allowing cloud services to innovate at speed. We use Rust as a core implementation language for this integration because it combines three important properties. It delivers performance comparable to C or C++. It enforces memory safety and freedom from data races at compile time, which removes a whole class of defects that cause production outages. And it provides modern tooling for building reliable networked services.

In practice, this means we build Rust services that raise the availability of cloud workloads and can sit between cloud and mainframe. Customer‑facing channels in the cloud can then read and write with predictable latency and correctness, while the core remains continuously available. Rust's strong type system and ownership model help us implement idempotent handlers for retries, back‑pressure in message ingestion, and zero‑copy pipelines where performance margins are tight.
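Idempotent handling is what makes retries safe. The Rust sketch below assumes the client attaches an idempotency key to each request. This convention and the `Ledger` and `Outcome` types are hypothetical. The first request is applied, and any retry with the same key returns the stored outcome instead of debiting twice. Everything is kept in memory for brevity. A production version would persist the key and the balance change in the same atomic transaction.

```rust
use std::collections::HashMap;

/// Outcome recorded for a processed request (hypothetical domain type).
#[derive(Debug, Clone, PartialEq)]
enum Outcome {
    Applied { new_balance: i64 },
    Rejected(&'static str),
}

/// In-memory account that deduplicates requests by idempotency key.
struct Ledger {
    balance: i64,
    seen: HashMap<String, Outcome>,
}

impl Ledger {
    /// Applies a debit at most once per idempotency key; retries get the stored outcome.
    fn debit(&mut self, idempotency_key: &str, amount: i64) -> Outcome {
        if let Some(prev) = self.seen.get(idempotency_key) {
            return prev.clone(); // a retry of a request we already handled
        }
        let outcome = if amount <= 0 {
            Outcome::Rejected("amount must be positive")
        } else if amount > self.balance {
            Outcome::Rejected("insufficient funds")
        } else {
            self.balance -= amount;
            Outcome::Applied { new_balance: self.balance }
        };
        // Rejections are remembered too, so a retry cannot succeed on a changed balance.
        self.seen.insert(idempotency_key.to_string(), outcome.clone());
        outcome
    }
}

fn main() {
    let mut ledger = Ledger { balance: 100, seen: HashMap::new() };
    let first = ledger.debit("txn-42", 30);
    let retry = ledger.debit("txn-42", 30); // e.g. the client timed out and retried
    println!("{first:?} / {retry:?} / balance {}", ledger.balance);
}
```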

We have found that using Rust significantly improves the practical availability of cloud services. In our projects, we typically expose gRPC and REST APIs in Rust that encapsulate transactional calls into subsystems, while streaming is handled through Kafka or cloud‑native pub/sub platforms where appropriate.
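Back‑pressure in message ingestion makes producers slow down when consumers fall behind. Without it, queues grow until memory or latency budgets are exhausted. The sketch below stands in for a Kafka or pub/sub consumer using only the bounded channel from Rust's standard library (`std::sync::mpsc::sync_channel`). The capacity and the simulated processing delay are arbitrary assumptions.

```rust
use std::sync::mpsc::{sync_channel, SyncSender, TrySendError};
use std::thread;
use std::time::Duration;

/// Runs a producer and a slow consumer over a bounded channel of `capacity` slots.
/// When the buffer is full, `send` blocks the producer, so ingestion never runs
/// more than `capacity` messages ahead of processing.
fn ingest(messages: Vec<u32>, capacity: usize) -> Vec<u32> {
    let (tx, rx) = sync_channel::<u32>(capacity);
    let producer = thread::spawn(move || {
        for m in messages {
            tx.send(m).expect("consumer hung up"); // blocks while the buffer is full
        }
        // `tx` is dropped here, which ends the consumer loop below.
    });
    let mut processed = Vec::new();
    for m in rx {
        thread::sleep(Duration::from_millis(1)); // stand-in for real work
        processed.push(m);
    }
    producer.join().expect("producer panicked");
    processed
}

/// Non-blocking variant: returns false when the buffer is full (or the consumer
/// is gone), so an API tier can shed load instead of waiting.
fn offer(tx: &SyncSender<u32>, m: u32) -> bool {
    match tx.try_send(m) {
        Ok(()) => true,
        Err(TrySendError::Full(_)) | Err(TrySendError::Disconnected(_)) => false,
    }
}

fn main() {
    let out = ingest((1..=20).collect(), 4);
    println!("processed {} messages in order: {:?}", out.len(), out);

    let (tx, _rx) = sync_channel::<u32>(2); // receiver kept alive but not reading
    let accepted: Vec<bool> = (0..3).map(|m| offer(&tx, m)).collect();
    println!("non-blocking offers with 2 free slots: {accepted:?}"); // third is refused
}
```

`ingest` blocks the producer whenever four messages are waiting. `offer` shows the non‑blocking alternative: with two free slots, the third offer is refused, so the caller can shed load or ask the client to retry.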

Because Rust compiles to a self‑contained native binary with a minimal runtime, we can deploy the same code across cloud container platforms and edge components. Where extensibility is needed in a constrained environment, we can also target WebAssembly for sandboxed execution. The result is a consistent, testable integration layer that supports the availability target set by the core platform. We pair this with chaos and failover drills that exercise zone and node loss. We also verify that retries and circuit breakers do not consume more than the downtime budget that corresponds to the chosen number of nines.
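A circuit breaker stops retries from turning a slow dependency into an outage of the whole path. The Rust sketch below is a minimal, single‑threaded illustration with hypothetical thresholds. After a set number of consecutive failures it rejects calls immediately, and after a cool‑down it lets one trial call through. The current time is passed in explicitly so the behaviour can be tested. A production version would also need thread safety and metrics.

```rust
use std::time::{Duration, Instant};

/// Minimal circuit breaker: closed until `max_failures` consecutive failures,
/// then open (fail fast) until `cool_down` has elapsed, then half-open (one trial call).
struct CircuitBreaker {
    max_failures: u32,
    cool_down: Duration,
    failures: u32,
    opened_at: Option<Instant>,
}

impl CircuitBreaker {
    fn new(max_failures: u32, cool_down: Duration) -> Self {
        CircuitBreaker { max_failures, cool_down, failures: 0, opened_at: None }
    }

    /// Runs `op` unless the breaker is open; returns None when the call was rejected.
    fn call<T, E>(&mut self, now: Instant, op: impl FnOnce() -> Result<T, E>) -> Option<Result<T, E>> {
        if let Some(opened) = self.opened_at {
            if now.duration_since(opened) < self.cool_down {
                return None; // open: fail fast without touching the dependency
            }
            // cool-down elapsed: half-open, let this one trial call through
        }
        let result = op();
        match &result {
            Ok(_) => {
                self.failures = 0;
                self.opened_at = None; // success closes the breaker
            }
            Err(_) => {
                self.failures += 1;
                // A failed trial call, or too many consecutive failures, (re)opens it.
                if self.opened_at.is_some() || self.failures >= self.max_failures {
                    self.opened_at = Some(now);
                }
            }
        }
        Some(result)
    }
}

fn main() {
    let mut cb = CircuitBreaker::new(3, Duration::from_secs(10));
    let start = Instant::now();
    for i in 0..5 {
        // Hypothetical downstream call that is currently failing.
        let r = cb.call(start, || Err::<u32, &str>("core timeout"));
        println!("attempt {i}: {r:?}");
    }
}
```

In the demo, the first three attempts reach the failing dependency and the last two are rejected without calling it. Choosing `max_failures` and `cool_down` is where the downtime budget comes in, because time spent waiting on timeouts before the breaker opens counts against it.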

Translating nines into a delivery plan

If your target is three nines, many internal systems and some customer journeys can accept occasional short interruptions. Standard regional high availability in cloud, with careful attention to stateful dependencies, can be sufficient.

If you aim for four nines in digital channels, design across zones and use managed services with zone redundancy, then simulate dependency failures to measure end‑to‑end impact against the 4.38‑minute monthly budget.
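One way to make the 4.38‑minute figure operational is to track it as a budget that both incidents and planned maintenance draw from. This treats maintenance as part of the availability budget rather than free time. The Rust sketch below is hypothetical. The `DowntimeBudget` type and the charged durations stand in for results you would collect from failure drills and your change calendar.

```rust
/// Monthly downtime budget for an availability target; incidents and planned
/// maintenance are both charged against it.
struct DowntimeBudget {
    budget_secs: f64,
    spent_secs: f64,
}

impl DowntimeBudget {
    /// Budget for one average month (365.25 / 12 days) at `target_pct` availability.
    fn monthly(target_pct: f64) -> Self {
        let month_secs = 365.25 * 24.0 * 3600.0 / 12.0;
        DowntimeBudget { budget_secs: (1.0 - target_pct / 100.0) * month_secs, spent_secs: 0.0 }
    }

    fn charge(&mut self, secs: f64) {
        self.spent_secs += secs;
    }

    fn remaining_secs(&self) -> f64 {
        self.budget_secs - self.spent_secs
    }

    fn breached(&self) -> bool {
        self.remaining_secs() < 0.0
    }
}

fn main() {
    let mut budget = DowntimeBudget::monthly(99.99); // ~263 s, i.e. ~4.38 minutes
    // Hypothetical measurements from a drill and the month's change calendar.
    budget.charge(95.0); // zone failover observed end to end during a drill
    budget.charge(120.0); // database maintenance that briefly blocked writes
    budget.charge(60.0); // dependency timeout cascade
    println!("remaining: {:.0} s, breached: {}", budget.remaining_secs(), budget.breached());
}
```

These example charges total 275 seconds, which overspends the roughly 263‑second budget by about 12 seconds. This month would miss four nines even though no single event looked serious.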

If you need five nines or better for a payment or trading path, keep the finality of state on IBM Z or HPE NonStop, and place a Rust‑based integration tier between core and cloud to provide a stable, resilient interface. This is the safest way to achieve near‑continuous availability while still benefiting from cloud scale and feature velocity.

Cost and operations should reflect the chosen target. Cloud minimises upfront spend, but each extra nine requires more zones or regions, duplicated data stores, strict change control, and ongoing verification work. Mainframes and NonStop concentrate reliability and reduce the moving parts visible to the application, but they require specialist skills and long‑lived investment. In both models, the availability you design must be supported by operational practice, including maintenance windows that fit within the downtime budget and robust incident response that is measured in seconds rather than minutes when the target demands it.

Digital Bank Expert’s role

Digital Bank Expert brings reference architectures and production‑grade Rust components that turn these principles into systems that run. We help you choose the right “nine” for each journey, anchor your design in the real SLAs that providers publish, and implement the seam between cloud and core so that innovation can proceed without compromising continuous operations. The combination of fault‑tolerant cores, cloud elasticity, and Rust for safe high‑performance integration is a practical path to reliability that matches the real risks in banking and payments.

Bibliography

- https://downloadtime.org/tech-calculators/uptime-calculator

- https://hyperping.com/99.999

- https://uptime.is/99.999

- https://hostdean.com/tools/uptime-calculator/six-nines/

- https://aws.amazon.com/compute/sla/?did=sla_card&trk=sla_card

- https://azure.microsoft.com/en-us/explore/global-infrastructure/availability-zones

- https://cloud.google.com/compute/sla

- https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-regions-availability-zones.html

- https://cloud.google.com/architecture/framework/reliability/design-scale-high-availability

- https://newsroom.ibm.com/z17

- https://www.ibm.com/products/z

- https://www.hpe.com/us/en/compute/nonstop-servers.html

- https://www.shadowbasesoftware.com/wp-content/uploads/Engineered-for-the-Highest-Availability-HPE-NonStop-Family-of-Systems.pdf

- https://www.ibm.com/products/watsonx-code-assistant-z